1 The Extent of the Problem


About

Rigor issues can occur in any step of the research cycle, leading to results that are unreliable, or that can’t even be reproduced by others! Because scientists build on each other’s results, the impacts of unreliable research can become widespread. Although scientists are still working to understand the breadth of rigor issues, everyone can work on solutions right now. Improving your skills and practices means that you, too, can be a part of making science better, every day.

Understand how rigor problems can arise during the research process.

Recognize that everyone is worried about the impacts of unreliable research.

Build community with others who care about improving research.

Can published results be trusted?

Nearly everyone knows of an example of a scientific result that has later been “disproven”.

This happens even in highly prestigious journals. A 1998 study published in The Lancet suggested that the MMR vaccine was a precipitating event for autism. It was later discovered that the lead author had manipulated data and had failed to disclose multiple conflicts of interest (Belluz 2019).

Another example is the “nudge” research trend of the early 2010s, which spawned many headlines, news segments, and TED talks, even though the associated effect failed to replicate in real-world settings and some of the most prominent studies behind nudges were based on fabricated data (Fountain et al. 2023).

Although these stories involved intentional deception by researchers acting in bad faith, most unreliable results come from scientists who act in good faith but lack training, time, or resources. How can that be?

Let’s consider the following…

Suppose that we have two studies that both rely on the same data, gathered by monitoring 251 school districts (~500,000 students, ~99,000 staffers) over the course of 16 weeks, with the aim of comparing COVID spread under 3-foot and 6-foot social distancing rules:

Study #1 No significant difference in COVID spread.

Clinical Infectious Diseases, Volume 73, Issue 10, 15 November 2021, Pages 1871–1878, https://doi.org/10.1093/cid/ciab230

Published: 10 March 2021

Study #2 Significant difference in COVID spread.

Clinical Infectious Diseases, Volume 75, Issue 1, 1 July 2022, Pages e310–e311, https://doi.org/10.1093/cid/ciac187

Published: 05 March 2022

The two studies share the same data, so why did they reach different results? Their exclusion criteria are to blame: Study #1 excluded all weeks with <5% student body attendance, while Study #2 excluded all weeks with <10% student body attendance. The difference between these two criteria seems small and arbitrary, yet each paper’s findings ultimately hinged on which one it used.
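To see how an exclusion rule alone can move a result across the significance line, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the records are invented, the names `RECORDS`, `compare_policies`, and `two_proportion_p_value` are hypothetical, and the pooled two-proportion z-test is a simple stand-in, not the method either published study used.

```python
import math

# Hypothetical district-week records, invented for illustration only:
# (distancing policy, share of students attending in person,
#  people monitored, new cases)
RECORDS = [
    ("3ft", 0.50, 10_000, 50),
    ("3ft", 0.60, 10_000, 52),
    ("3ft", 0.07, 10_000, 10),
    ("6ft", 0.55, 10_000, 30),
    ("6ft", 0.45, 10_000, 32),
    ("6ft", 0.08, 10_000, 62),
]


def two_proportion_p_value(cases_a, n_a, cases_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (cases_a + cases_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (cases_a / n_a - cases_b / n_b) / se
    # For a standard normal Z, P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def compare_policies(records, min_attendance):
    """Drop weeks below the attendance threshold, then compare case rates."""
    totals = {"3ft": [0, 0], "6ft": [0, 0]}  # policy -> [cases, people]
    for policy, attendance, people, cases in records:
        if attendance < min_attendance:
            continue  # the exclusion criterion under study
        totals[policy][0] += cases
        totals[policy][1] += people
    (cases_3, n_3), (cases_6, n_6) = totals["3ft"], totals["6ft"]
    return two_proportion_p_value(cases_3, n_3, cases_6, n_6)


for threshold in (0.05, 0.10):
    p = compare_policies(RECORDS, threshold)
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"exclude weeks below {threshold:.0%} attendance: p = {p:.3f} ({verdict})")
```

With these invented numbers, the comparison falls below the conventional 0.05 cutoff only under the stricter 10% rule, because that rule drops the two low-attendance weeks. A real analysis would also need to account for clustering within districts and for confounders, which this sketch ignores.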

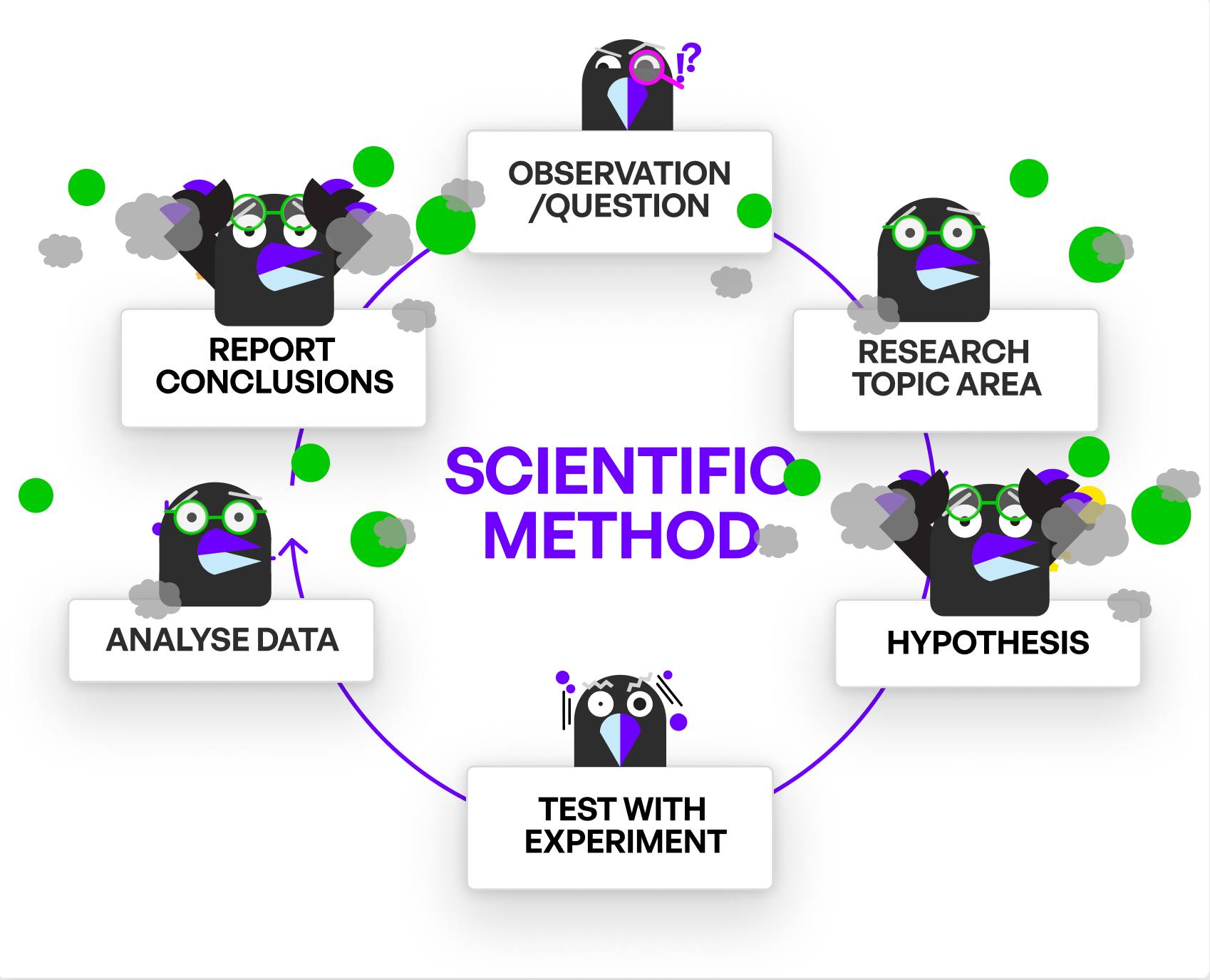

Rigor issues in the research cycle

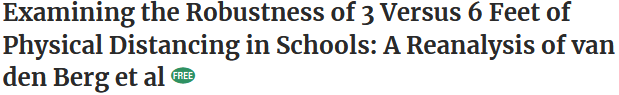

When we think about all the work that goes into a research study, even a simplified diagram of the research cycle has multiple steps, and each of these steps involves effort and decisions that can affect rigor.

The cumulative effect of these issues (and more) can make for a research system that feels unreliable as a whole, which raises the question: just how widespread are such issues?

How widespread are rigor issues?

We’re scientists, so let’s explore with some data. Make your own estimates about the prevalence of rigor issues and compare them with data from a survey of Nature readers (Baker 2016).

Estimating reproducibility

While surveys offer insight into expert opinions, large teams of scientists have worked to quantify the reliability of publications:

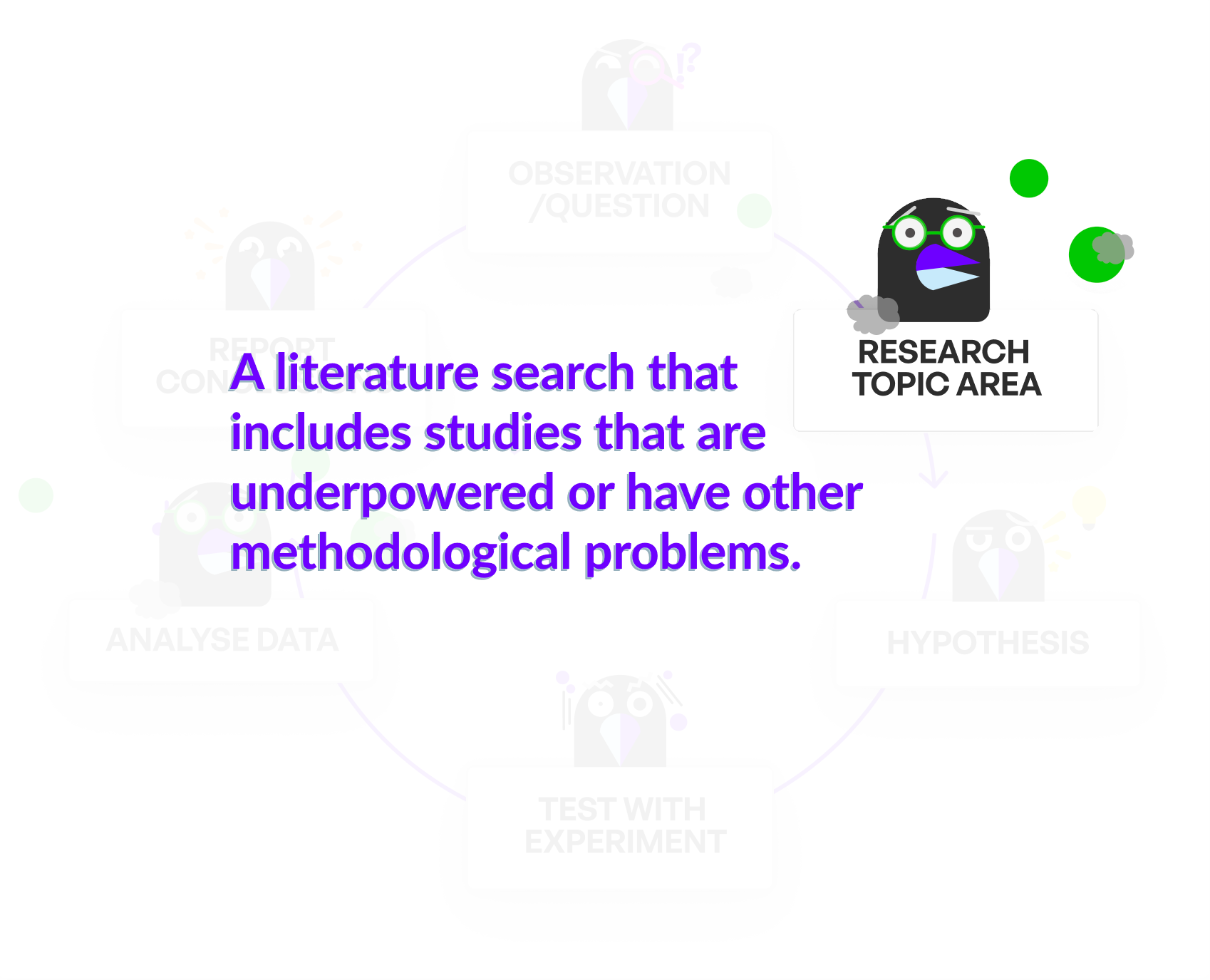

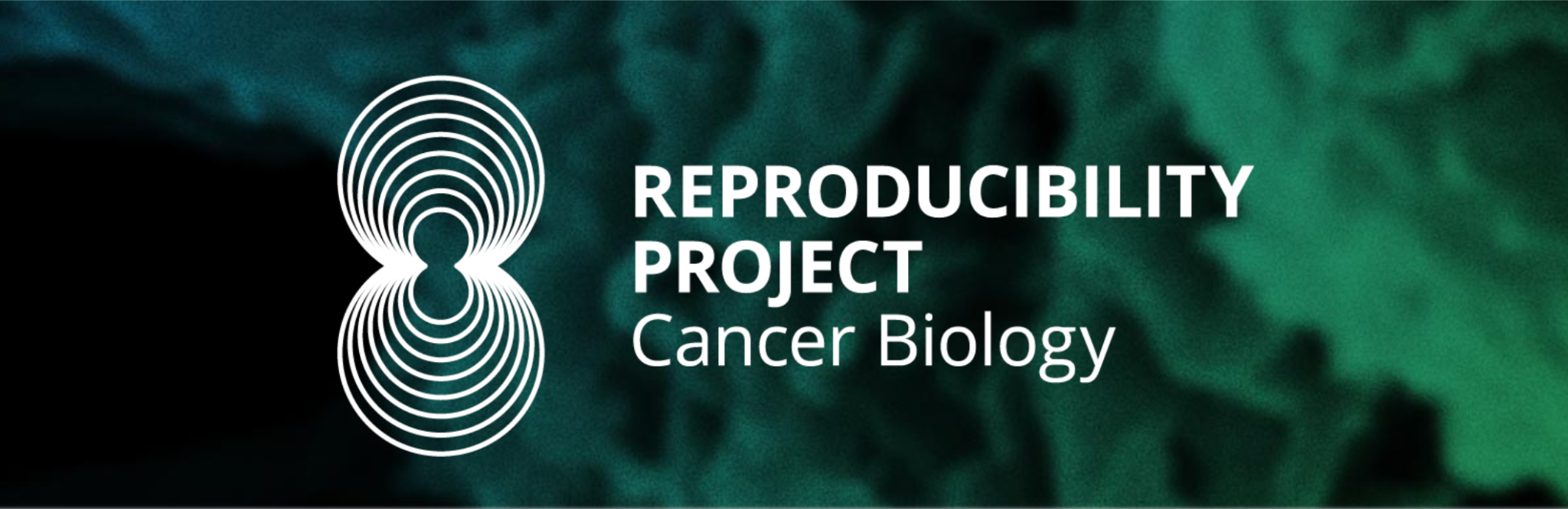

For example, a recent attempt to replicate findings in the field of cancer biology found a number of issues (Center for Open Science 2023):

Although this project is titled “Reproducibility Project”, it is a test of the extent to which published results can be replicated. Our usage of the word “replicate” to describe these efforts follows the definitions established by the National Academies of Sciences, Engineering, and Medicine (2019).

For this report, reproducibility is obtaining consistent results using the same input data; computational steps, methods, and code; and conditions of analysis. This definition is synonymous with “computational reproducibility,” and the terms are used interchangeably in this report.

Replicability is obtaining consistent results across studies aimed at answering the same scientific question, each of which has obtained its own data.

The Human Impact of Rigor

These numbers suggest widespread impact, but what does that look like on a human level? Let’s put a face to this by listening to some real-world stories volunteered by scientists.

What’s next?

It’s understandable (and appropriate) if all of this worries you, but we urge you to take heart in the fact that there are good reasons to feel hopeful about the future. For example:

- Academic institutions have begun to recognize the problem, which is the first step to addressing it.

- The scary numbers we just cited only exist because smart people care deeply about fixing this.

- In taking this unit right now, you have taken the first step towards becoming part of the solution.

Takeaways

- Unreliable research can result from rigor issues at any stage in the research cycle, from an initial idea to publication.

- Scientists are concerned about the widespread impacts of unreliable research.

- Many efforts are underway to measure the problem and work on solutions – including you taking this unit right now!

![2% [of] experiments [have] open data; 70% of [replication] experiments required [us] asking for key reagents; 69% of experiments [needed] a key reagent [that] original authors were willing to share; 0% of protocols [were] completely described; in 32% of experiments the original authors were not helpful (or unresponsive); in 41% of experiments the original authors were very helpful.](images/COS_stats_cancer-reproducibility.png)